Quality Assurance Is How We Roll

These days, a lot of companies approach QA as “Quality Assistance”, instead of going for the more traditional “Quality Assurance” interpretation. The idea is that QA usually only finds the issues, but is in no way responsible for fixing them. While this is true (in most cases), I believe this phrasing implies that QA lacks ownership over the state of the game:

It’s easier to say

“we found the issue, but because the Devs didn’t fix it, we now have to hotfix”

than to admit

“we found the issue, and it’s our fault that we didn’t push it enough. Now we have to hotfix because we underestimated the bug”.

Calling it “Quality Assistance” moves ownership over the quality of the game to the implementing members of the game team. “Quality Assurance” implies that ownership stays with the QA Team — we’re here to find everything that bugs the player, and we’re accountable when things fail.

While I could write a whole blog post about this topic alone, the short version is that taking ownership of the game’s quality is a key factor in improving QA processes. A “we let it slip through, how can we get better?” mentality pushes a QA team toward a great performance every sprint: the “A” is for Assurance, not Assistance.

Our Processes

Efficiency and result-driven testing are key components in our processes: we are not looking for a perfectly bug-free product, we are looking for a product where bugs don’t impact the player’s experience.

As a team, we shipped more than 30 hotfix-free updates in 2019 on Idle Factory Tycoon. To keep up with that speed, our QA Team uses a testing approach built to be fast, efficient, and lean.

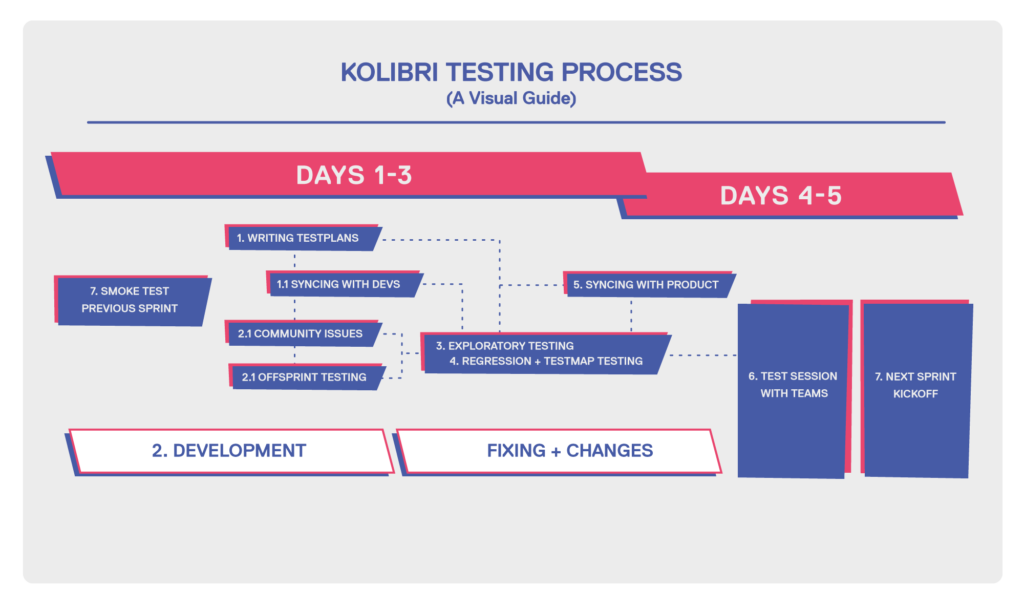

Visualized (Development is simplified to highlight the QA steps)

The approach is simple:

- The sprint starts. While the Developers work on the feature, we write Happy Path test plans and add a few edge cases. At this stage, we already sync with Development, Design, and Product about missed edge cases, cheat requirements, weaknesses in the design, or forgotten aspects of the feature. Finding issues as early as possible (ideally before implementation) is the key to a fast cycle.

- After one to five days of development, the code gets reviewed, tested on a device, and merged to our testing branch. QA does not test on feature branches. Meanwhile, QA follows up on player reports, works on test plans, reviews them, and takes care of sprint-unrelated tasks like performance testing and compatibility testing.

- The feature hits our testing branch. Exploratory testing starts, and usually the first bug tickets reach the Dev Team.

- After the first exploratory round of testing is complete, the testers regression-test the bug tickets and work their way through the test plan.

- Next, the feature gets a playtest by the Designer or Product Manager, to make sure the implementation fits the vision.

- The last point of the active sprint is a test session. The game team gets together and tests the game based on testing guidelines provided by QA. If issues are found, we quickly sync on whether fixing them is worth the risk, or whether the version is OK to ship.

- The last action of QA in a sprint is smoke testing the release builds. A quick look over key features (e.g. purchases, tutorial, Cloud Save, you know it…) ensures that everything is ready for rollout. Then the Product Manager presses the magic button and the version gets pushed out. While this is happening, the rest of the team is already working on the next sprint.

This whole process usually takes five days. We don’t have an army of testers (at the time of writing, the QA Team on Idle Factory Tycoon is two people), but thanks to a testing-oriented production team and an incredibly fast development process, QA has enough time to do the testing required for a smooth and bug-free player experience.

Test Plans — Stay Lean, Stick to the Key Points

We don’t have a dedicated QA Lead to write our test cases. Just like our processes, we keep our test cases as lean as possible:

When the sprint starts, feature test plans are written by a QA Feature Owner. They are kept short and focused on the key points of the feature. That means removing unnecessary cases that take a lot of time without having an impact on the product, like extensive compatibility tests for example.

Thanks to our great QA Google Sheets Specialists, we don’t need a QA Tool to manage our test plans. Scripts and Workflows are automated and specific to our games.

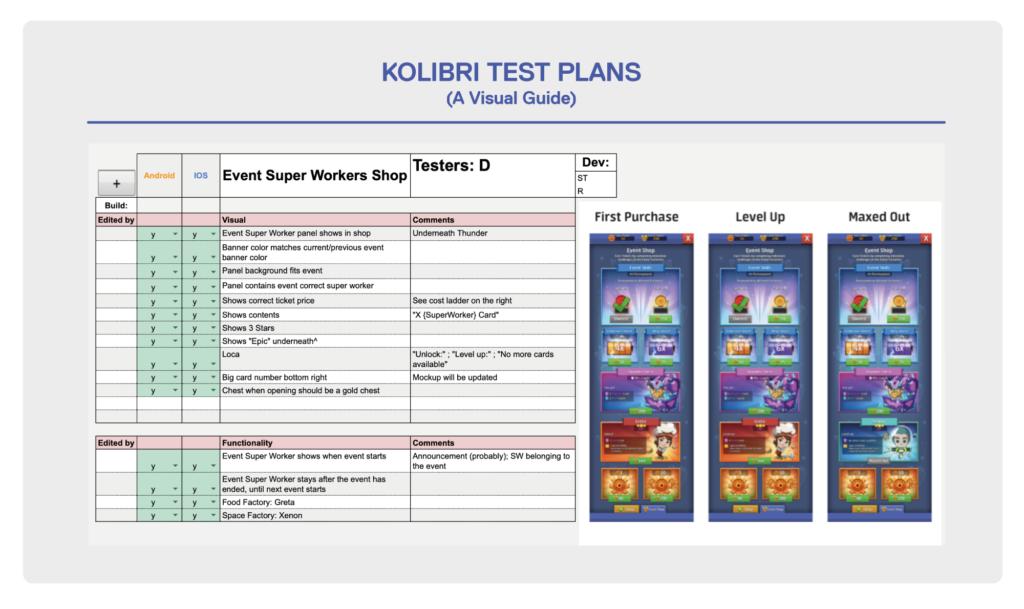

Our test cases are single sentences: “Do this, this happens”. There are no steps and no separate description of intended behavior (mockups go right next to our cases). A simple statement is enough to get the point across, since our testers were part of the design process and already know what issue we’re trying to fix or what goal we’re trying to achieve with the feature. If a case needs several steps to complete, it’s faster to split it up into several cases. The flow of testing should not be disrupted by having to open individual cases or by spending more than a few seconds reading testing steps.

Test map of a very popular Feature — The Event Super Workers. Notice the basic cases following a straight rhythm for testing.

Scripted and Exploratory Testing

Scripted testing is a necessary evil. Repeated tests like smoke tests are fastest and most efficient when they are written down (much like our feature test plans, which are closer to a “requirements sheet” with a few edge cases than to a “traditional” test plan). Scripted tests are also easier to measure than exploratory tests. We use a rotation system for repeated tests, so not every release tests every important feature. Instead, we rotate important cases so that we cover more features over a cycle of three to four releases. Thanks to this approach, our scripted tests are fast and lean but keep a high level of coverage.
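
To make the rotation idea more concrete, here is a minimal Python sketch. It is not our actual tooling (that lives in Google Sheets), and the split into "always" and "rotating" cases, the function names, and the feature names in the usage example are assumptions for illustration. It simply deals the rotating cases out round-robin so that every case runs once per cycle.

```python
def rotation_plan(always, rotating, cycle_length=3):
    """Return one list of test cases per release in a rotation cycle.

    always:       cases that run in every release (hypothetical split)
    rotating:     cases that only need to run once per cycle
    cycle_length: number of releases in one full rotation
    """
    if cycle_length < 1:
        raise ValueError("cycle_length must be at least 1")
    rotating = list(rotating)
    # Deal the rotating cases out round-robin into one bucket per release.
    buckets = [rotating[i::cycle_length] for i in range(cycle_length)]
    return [list(always) + bucket for bucket in buckets]


def cases_for_release(release_index, always, rotating, cycle_length=3):
    """Pick the cases for a given release; the plan repeats after each cycle."""
    plan = rotation_plan(always, rotating, cycle_length)
    return plan[release_index % cycle_length]


if __name__ == "__main__":
    always = ["purchases", "tutorial", "Cloud Save"]
    # Hypothetical feature names, only here to show the rotation.
    rotating = ["events", "shop", "settings", "offline progress", "notifications"]
    for release in range(3):
        print(release, cases_for_release(release, always, rotating))
```

Each release stays short, but after one full cycle every rotating case has been run exactly once.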

On the other hand, we consider exploratory testing, an incredibly underrated approach, the best way to find bugs. Every feature that hits QA is tested first without guidelines (apart from a basic design document), trusting the tester and their knowledge of the game. Historically, most issues have been found during exploratory testing, and it’s way quicker than writing extensive test plans and following test cases that are more detailed than most game design documents.

Our testing approach here follows a clear “keep it as close as possible to live” mentality, and thanks to our extensive testing setup in the game on the development branches (over 100 Cheats!) and our ability to load any player’s save game in this setup, it’s very easy for us to simulate any player’s progress.

The same mentality applies to our test session at the end of the sprint. Based on a few testing guidelines, Devs, Artists, Game Designer, and Product Management all get their hands on the new features in a 20 to 30-minute meeting and find the last issues or bring up last-minute changes. This is also a great opportunity to make sure that every team member plays the game and gets a feel for the new features.

Crash Tracking

We keep an eye on all crashes and exceptions that occur while the version is live. We barely have any crashes thanks to a stable development process (let’s forget low-memory devices for a moment), but exceptions are a great indicator of bugs and usually give enough hints about which parts of the game are having issues. We track recurring exceptions and investigate new exceptions with every release.

Most of the time, a new exception is either a minor issue that is easy to reproduce and fix, or an exception coming from an SDK without user-facing problems. For issues that require deeper digging, we can load player save games in our testing environment with more than 150 test devices and take a look at the logs from there, which is usually enough to find the issue.
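
Separating new exceptions from recurring ones, as described above, can be sketched in a few lines of Python. This is only an illustration: the function name and the signature strings are made up, and it assumes the crash reporting tool (whichever one is in use) can export one exception signature per occurrence for each release.

```python
from collections import Counter


def triage_exceptions(previous_release, current_release):
    """Split the current release's exceptions into new and recurring ones.

    Both arguments are iterables of exception signatures (strings),
    one entry per occurrence. Returns two dicts mapping signature to
    its occurrence count in the current release.
    """
    seen_before = set(previous_release)
    counts = Counter(current_release)
    new = {sig: n for sig, n in counts.items() if sig not in seen_before}
    recurring = {sig: n for sig, n in counts.items() if sig in seen_before}
    return new, recurring


if __name__ == "__main__":
    # Hypothetical signatures, not real data from our games.
    previous = ["NullReferenceException@ShopPanel", "TimeoutException@AdsSdk"]
    current = [
        "TimeoutException@AdsSdk",
        "TimeoutException@AdsSdk",
        "KeyNotFoundException@EventWorkers",
    ]
    new, recurring = triage_exceptions(previous, current)
    print("investigate:", new)
    print("keep tracking:", recurring)
```

Everything in the first dict gets investigated right after the release, while the second dict is the list of known exceptions to keep watching.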

Concluding Remarks

Our process is built in a way that keeps long tests (like performance testing) from getting in the way of the sprint. Thanks to a clear integration of the QA Team, our communication with Developers, Design, and Product is super smooth, even when things get hectic.

We test as early as possible, use as much exploratory testing as possible, integrate stakeholders in the whole process, take ownership of the issues on live, and make scripted testing extremely lean. We don’t do hotfixes because we focus on the critical areas first, bring edge cases to the Dev Team before they start implementing the features, and involve the rest of the Game Team in the QA process. Meanwhile, we watch every exception and are ready to fix it before it escalates.

Product Management trusts us: we intentionally skip inefficient tests (like resolution testing), we waive a lot of our issues within QA, we take calculated risks knowing players might run into bugs, and only in a few rare cases does our testing slow down the sprint. Our first step toward testing truly lean was to forget a lot of things that people taught us about QA.

One More Thing…

While I would like to highlight the success of our testing process, QA can’t claim all the credit alone: everything I write here is only possible because of a great company culture, a dedicated and efficient Development Team, hardworking Artists, super awesome Designers and a great set of Product Managers.

To a hotfix-free 2020!